Ethical AI Systems: The Importance of Fairness, Transparency, and Accountability

- Zaineb Rani

- Oct 20, 2024


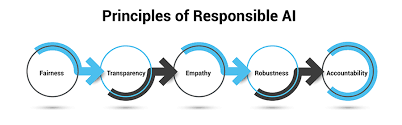

Artificial Intelligence (AI) is reshaping our world, making it crucial that ethical principles guide its development and deployment. Among the core values for ethical AI systems are fairness, transparency, and accountability. These principles are vital to building trust in AI technology, but achieving them involves complex challenges. This post discusses why these principles matter, the obstacles to implementing them, and a framework for developing ethical AI systems.

The Need for Fairness, Transparency, and Accountability in AI

To understand why these principles are essential, consider how much is at stake when AI makes decisions about people. For example, if an AI system determines creditworthiness or job eligibility, the outcome could significantly affect someone's life. Fairness requires that the AI decision-making process avoid bias and treat all individuals equitably. Transparency ensures that users understand how the AI reaches its decisions, while accountability means that someone is responsible for the outcomes the AI produces. Together, these principles aim to create AI systems that are not only technically advanced but also socially beneficial.

The Challenges of Achieving Fairness, Transparency, and Accountability

Despite the importance of these values, there are significant challenges in making AI systems truly fair, transparent, and accountable:

Bias in Data and Algorithms

AI systems often learn from historical data, which may contain biases. These biases can lead to unfair treatment of certain groups if not addressed. Developing algorithms that detect and mitigate bias is challenging, particularly in complex datasets.
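One common way to surface bias in historical data is to compare how often each group received a favorable outcome. The sketch below computes per-group selection rates and the disparate impact ratio (lowest rate divided by highest). The record fields `group` and `approved` are hypothetical, and the 0.8 threshold borrows the "four-fifths rule" from US employment guidance as an assumption, not a universal standard.

```python
from collections import defaultdict

def selection_rates(records, group_key="group", outcome_key="approved"):
    """Return the fraction of favorable outcomes for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for r in records:
        g = r[group_key]
        totals[g] += 1
        if r[outcome_key]:
            favorable[g] += 1
    return {g: favorable[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group gets favorable outcomes; the ratio is undefined, report parity
    return min(rates.values()) / highest

# Hypothetical historical loan decisions
history = [
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": False},
    {"group": "B", "approved": True},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
]

rates = selection_rates(history)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.75, 'B': 0.25}
print(round(ratio, 2))  # 0.33
if ratio < 0.8:  # assumed four-fifths threshold
    print("Possible adverse impact: review data and labels before training")
```

A low ratio does not prove discrimination on its own, but it tells you where to look before the bias is learned by a model.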

Black Box Nature of AI Models

Some AI models, especially deep learning algorithms, function like "black boxes," where the decision-making process is not easily interpretable. This lack of transparency can make it difficult to understand why a particular outcome was reached.

Data Privacy Concerns

Using personal data in AI systems raises issues regarding data privacy. Ethical AI development requires ensuring data is collected, stored, and used responsibly, adhering to legal standards while respecting individuals' privacy rights.

Accountability Gaps

Determining who is responsible for AI outcomes is a complex issue. If an AI system makes a harmful decision, does the blame lie with the developers, the organization that deployed it, or the AI system itself? This ambiguity makes accountability difficult to establish.

Transparency in AI

Transparency is fundamental to ethical AI development. It involves making the AI's decision-making process clear and understandable to users and stakeholders. Key aspects include:

Explainability: Ensuring AI decisions can be interpreted and explained in simple terms, allowing users to understand why a specific decision was made.

Data Disclosure: Being open about the data used to train AI models, including its sources, quality, and any known limitations.

Algorithmic Transparency: Sharing the logic or rules behind the algorithms, though it is often a challenge to balance transparency with protecting proprietary technologies.
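For simple models, explainability can be as direct as reporting how much each input pushed a score up or down. The sketch below assumes a hypothetical linear credit-scoring model with made-up weights and a hypothetical "average applicant" baseline. For a linear model, each feature's contribution relative to the baseline is `weight * (value - baseline)`, and these contributions sum exactly to the difference between the two scores.

```python
# Hypothetical linear credit-scoring model: weights and baseline are illustrative only
WEIGHTS = {"income_k": 0.04, "debt_ratio": -2.0, "late_payments": -0.5}
INTERCEPT = 1.0
BASELINE = {"income_k": 50.0, "debt_ratio": 0.3, "late_payments": 1.0}  # e.g. an average applicant

def score(applicant):
    """Linear score: intercept plus weighted sum of features."""
    return INTERCEPT + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)

def explain(applicant):
    """Per-feature contribution relative to the baseline, largest effect first."""
    contributions = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

applicant = {"income_k": 40.0, "debt_ratio": 0.6, "late_payments": 3.0}
for feature, effect in explain(applicant):
    direction = "raised" if effect > 0 else "lowered"
    print(f"{feature} {direction} the score by {abs(effect):.2f}")
```

Deep models do not decompose this neatly, which is why tools such as SHAP and LIME exist to approximate the same kind of per-feature attribution.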

Data Privacy in AI: Balancing Utility and Protection

Handling data responsibly is essential to building trust in AI systems. Data privacy involves:

Secure Data Handling: Ensuring data is encrypted, anonymized, and stored securely to prevent unauthorized access.

Data Minimization: Collecting only the data that is necessary for the AI's functionality to limit exposure of personal information.

User Consent and Control: Allowing users to consent to data collection and offering them control over their data, including the right to have their data deleted.
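Two of these measures can be sketched in a few lines, assuming a hypothetical record schema: data minimization keeps only an allow-list of fields the model needs, and the direct identifier is replaced with a keyed hash (HMAC-SHA256) so records can still be linked without storing the raw ID. This is pseudonymization rather than full anonymization: whoever holds the key can re-link records, and the remaining fields may still allow re-identification.

```python
import hashlib
import hmac

# Fields the model actually needs (an assumed allow-list for illustration)
ALLOWED_FIELDS = {"age", "income_k", "loan_amount_k"}

def pseudonymize(user_id: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

def minimize(record: dict, key: bytes) -> dict:
    """Keep only allow-listed fields plus a pseudonymous ID; drop everything else."""
    cleaned = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    cleaned["pid"] = pseudonymize(record["user_id"], key)
    return cleaned

# In practice the key would come from a secrets manager, never from source code
key = b"example-secret-key"
raw = {"user_id": "u-1001", "name": "Jane Doe", "email": "jane@example.com",
       "age": 34, "income_k": 52, "loan_amount_k": 10}
print(minimize(raw, key))
```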

Accountability and Liability

Accountability means assigning responsibility for AI's decisions and their consequences. To achieve this:

Clear Governance Frameworks: Organizations must establish guidelines that outline responsibilities for AI development and deployment.

Monitoring and Auditing: Regularly evaluating AI systems to detect and rectify errors or biases that could harm users.

Liability Provisions: Having legal structures in place to address liability in cases where AI decisions cause harm. This can include insurance or compensation mechanisms.
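Monitoring, auditing, and liability all depend on being able to reconstruct what a system decided and who was answerable for it. A minimal sketch, assuming a hypothetical append-only JSON Lines log: each decision is recorded with a timestamp, the model version, the accountable owner, a hash of the inputs, and the output. The model name and owner address are made up.

```python
import hashlib
import json
import os
import tempfile
from datetime import datetime, timezone

def log_decision(path, model_version, owner, inputs, output):
    """Append one decision record to a JSON Lines audit log and return it."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "accountable_owner": owner,
        # Store a hash of the inputs rather than the raw personal data; auditors holding
        # the original inputs can recompute the hash to confirm what the model saw
        "input_sha256": hashlib.sha256(
            json.dumps(inputs, sort_keys=True).encode("utf-8")
        ).hexdigest(),
        "output": output,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record

def read_log(path):
    """Load every record from the audit log, oldest first."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f]

# Hypothetical usage: one credit decision written to a temporary log file
log_path = os.path.join(tempfile.mkdtemp(), "decisions.jsonl")
rec = log_decision(log_path, "credit-model-1.4", "risk-team@example.com",
                   {"income_k": 40, "debt_ratio": 0.6}, "declined")
print(read_log(log_path)[0]["accountable_owner"])
```

Appending rather than overwriting keeps earlier entries intact; a production system would also need tamper protection and retention rules.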

Framework for Developing Ethical AI Systems

To build an ethical AI system that adheres to fairness, transparency, and accountability, a structured framework is required:

Design Stage

Bias Detection: Incorporate tools and methods for detecting biases in data during the design phase.

Diverse Teams: Include diverse perspectives in the development team to identify potential biases early.
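Bias detection at the design stage can begin before any model exists, by checking whether each group appears in the training data roughly in proportion to the population the system will serve. A sketch, assuming hypothetical group labels, hypothetical population shares, and an arbitrary tolerance of 5 percentage points:

```python
from collections import Counter

def representation_gaps(groups, reference_shares, tolerance=0.05):
    """Compare each group's share of the dataset with its expected share.

    Returns {group: (observed_share, expected_share)} for groups whose
    observed share differs from the expected share by more than `tolerance`.
    """
    counts = Counter(groups)
    n = len(groups)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / n
        if abs(observed - expected) > tolerance:
            gaps[group] = (observed, expected)
    return gaps

# Hypothetical training-set group labels and population shares
training_groups = ["A"] * 70 + ["B"] * 25 + ["C"] * 5
population = {"A": 0.60, "B": 0.25, "C": 0.15}
print(representation_gaps(training_groups, population))
```

Here group C is underrepresented and group A overrepresented, a signal to collect more data or reweight before training.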

Development Stage

Algorithm Testing: Continuously test algorithms for fairness and transparency. Implement explainability tools to interpret complex models.

Privacy Measures: Use anonymization techniques and secure data handling protocols to protect users' privacy.
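One common anonymization technique is generalization: replacing precise quasi-identifiers with coarser values so individuals are harder to single out. The sketch below, with hypothetical field names and bucket sizes, turns exact ages into 10-year bands and keeps only the first three digits of a postal code. Generalization alone does not guarantee anonymity; it is usually paired with a check such as k-anonymity.

```python
def generalize_age(age: int, band: int = 10) -> str:
    """Map an exact age to a band such as '30-39'."""
    low = (age // band) * band
    return f"{low}-{low + band - 1}"

def generalize_zip(zip_code: str, keep: int = 3) -> str:
    """Keep the leading digits of a postal code and mask the rest."""
    return zip_code[:keep] + "*" * (len(zip_code) - keep)

def generalize(record: dict) -> dict:
    """Return a copy of the record with age and zip generalized."""
    out = dict(record)
    out["age"] = generalize_age(record["age"])
    out["zip"] = generalize_zip(record["zip"])
    return out

print(generalize({"age": 34, "zip": "94107", "diagnosis": "asthma"}))
# {'age': '30-39', 'zip': '941**', 'diagnosis': 'asthma'}
```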

Deployment Stage

User Training: Provide training for end-users to understand AI outputs and decision-making processes.

Feedback Mechanisms: Enable feedback loops for users to report discrepancies or unexpected outcomes.

Monitoring and Maintenance

Regular Audits: Conduct regular audits to ensure AI systems remain ethical throughout their lifecycle.

Updates and Revisions: Continuously update AI models to reflect new data, user feedback, and ethical standards.
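Regular audits are easier to schedule when an automated signal shows that a system's inputs have shifted since it was validated. One widely used heuristic is the population stability index (PSI), which compares the binned distribution of a feature at validation time with its distribution in production. The sketch assumes pre-computed bin proportions for a single hypothetical feature; the 0.1 and 0.25 cut-offs are common rules of thumb, not a standard.

```python
import math

def psi(expected, actual, eps=1e-6):
    """Population stability index between two lists of bin proportions."""
    if len(expected) != len(actual):
        raise ValueError("expected and actual must have the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total

def drift_status(value):
    """Classify a PSI value using assumed rule-of-thumb thresholds."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate shift: investigate"
    return "major shift: trigger audit and retraining review"

training_bins = [0.25, 0.25, 0.25, 0.25]    # feature distribution at validation time
production_bins = [0.10, 0.20, 0.30, 0.40]  # same bins observed in production
value = psi(training_bins, production_bins)
print(round(value, 3), drift_status(value))
```

Drift in inputs does not by itself mean the model has become unfair, but it is a cheap trigger for re-running the fairness and performance checks.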

Case Study

Implementing Ethical AI in Healthcare

One example of ethical AI in action comes from healthcare, where AI models assist in diagnosing diseases. Fairness means the model must perform equally well across different demographic groups. Transparency involves explaining the reasoning behind the model's diagnosis to doctors and patients, while accountability requires that medical professionals validate AI-generated recommendations.
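The requirement that medical professionals validate AI-generated recommendations can be enforced in software rather than left to policy alone. A minimal sketch, using a hypothetical `Recommendation` type and made-up identifiers: a recommendation cannot be released until a named clinician has reviewed and approved it, and the reviewer's identity stays attached to the record.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Recommendation:
    patient_pid: str
    suggestion: str
    model_confidence: float
    reviewed_by: Optional[str] = None
    approved: Optional[bool] = None

def review(rec: Recommendation, clinician_id: str, approve: bool) -> None:
    """Record a clinician's decision on an AI recommendation."""
    rec.reviewed_by = clinician_id
    rec.approved = approve

def release(rec: Recommendation) -> str:
    """Release a recommendation only after a clinician has approved it."""
    if rec.reviewed_by is None:
        raise PermissionError("recommendation has not been reviewed by a clinician")
    if not rec.approved:
        raise PermissionError(f"recommendation rejected by {rec.reviewed_by}")
    return rec.suggestion

rec = Recommendation("pid-7f3a", "order chest X-ray", model_confidence=0.82)
try:
    release(rec)
except PermissionError as err:
    print("blocked:", err)
review(rec, clinician_id="dr-0042", approve=True)
print(release(rec))
```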

Ethical AI Test Module

To evaluate whether an AI system aligns with ethical standards, consider the following test module:

Bias Detection Test

Objective: Ensure the model does not produce biased outcomes.

Procedure: Run the model against diverse datasets and compare the performance across different demographic groups.
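A sketch of this procedure, assuming you already have model predictions, true labels, and a group attribute for a held-out test set (the data below is made up). It reports accuracy and true positive rate (the share of actual positives the model catches) for each group, and fails the test if the TPR gap exceeds a tolerance; 0.1 is an arbitrary example value.

```python
import math
from collections import defaultdict

def per_group_metrics(y_true, y_pred, groups):
    """Accuracy and true positive rate (recall) for each group."""
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "pos": 0, "tp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        s = stats[g]
        s["n"] += 1
        s["correct"] += int(t == p)
        if t == 1:
            s["pos"] += 1
            s["tp"] += int(p == 1)
    return {
        g: {
            "accuracy": s["correct"] / s["n"],
            "tpr": s["tp"] / s["pos"] if s["pos"] else float("nan"),
        }
        for g, s in stats.items()
    }

def bias_test(metrics, max_tpr_gap=0.1):
    """Pass only if true positive rates differ by at most max_tpr_gap across groups."""
    tprs = [m["tpr"] for m in metrics.values() if not math.isnan(m["tpr"])]
    gap = max(tprs) - min(tprs)
    return gap <= max_tpr_gap, gap

# Hypothetical held-out test set
y_true = [1, 1, 0, 1, 0, 1, 1, 0, 1, 0]
y_pred = [1, 1, 0, 1, 0, 1, 0, 0, 0, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
metrics = per_group_metrics(y_true, y_pred, groups)
passed, gap = bias_test(metrics)
print(metrics)
print("passed" if passed else f"failed: TPR gap {gap:.2f}")
```

TPR is only one choice; depending on the application, false positive rates or calibration per group may matter more.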

Transparency Test

Objective: Assess the explainability of the model's decisions.

Procedure: Use explainability tools like SHAP or LIME to explain sample decisions and validate whether non-technical users can understand them.
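SHAP is built on Shapley values from cooperative game theory. For a model with only a few features, these values can be computed exactly by brute force, which helps show what the library approximates. The sketch below does not call SHAP or LIME: it uses a hypothetical toy model and a single baseline input to stand in for "missing" features, a simplification of SHAP, which typically averages over a background dataset.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values for model(x), with absent features set to baseline values."""
    n = len(x)

    def value(coalition):
        # Features in the coalition take their real values; the rest use the baseline
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

# Hypothetical toy risk model with an interaction between features 0 and 1
def toy_model(z):
    return 2.0 * z[0] + 1.0 * z[1] + 3.0 * z[0] * z[1] - 0.5 * z[2]

x = [1.0, 1.0, 2.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print([round(p, 2) for p in phi])
```

The interaction term is split equally between features 0 and 1, and the values always sum to `model(x) - model(baseline)`, which is a quick sanity check on any explanation. Enumerating every coalition grows exponentially with the number of features, which is why practical tools approximate it.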

Privacy Protection Test

Objective: Verify that personal data is protected.

Procedure: Conduct security checks on data encryption, access controls, and anonymization processes.
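One concrete check on anonymization processes is k-anonymity: every combination of quasi-identifiers (fields that could be linked to outside data, such as an age band and a postal-code prefix) must be shared by at least k records. A sketch with hypothetical released records and k = 2:

```python
from collections import Counter

def k_anonymity(records, quasi_identifiers):
    """Smallest number of records sharing any one combination of quasi-identifiers."""
    keys = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(keys.values())

def violating_groups(records, quasi_identifiers, k):
    """Quasi-identifier combinations shared by fewer than k records."""
    keys = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return [key for key, count in keys.items() if count < k]

released = [
    {"age": "30-39", "zip": "941**", "diagnosis": "asthma"},
    {"age": "30-39", "zip": "941**", "diagnosis": "diabetes"},
    {"age": "40-49", "zip": "941**", "diagnosis": "asthma"},
    {"age": "40-49", "zip": "941**", "diagnosis": "flu"},
    {"age": "50-59", "zip": "100**", "diagnosis": "flu"},
]
qi = ["age", "zip"]
print("k =", k_anonymity(released, qi))
print("too small:", violating_groups(released, qi, k=2))
```

The last record is unique on its quasi-identifiers, so this release would fail a k = 2 requirement until that record is generalized further or suppressed.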

Accountability Test

Objective: Determine who is responsible for AI decisions.

Procedure: Review governance documentation and incident response plans to ensure clear accountability is defined.
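Part of this review can be automated by keeping a model registry and checking that every deployed model names an accountable owner, an incident-response contact, and a last audit date. The registry format, field names, and entries below are hypothetical:

```python
# Hypothetical model registry entries
REQUIRED_FIELDS = ("accountable_owner", "incident_contact", "last_audit")

registry = [
    {"model": "credit-model-1.4", "status": "deployed",
     "accountable_owner": "risk-team@example.com",
     "incident_contact": "oncall-risk@example.com", "last_audit": "2024-09-30"},
    {"model": "triage-model-0.9", "status": "deployed",
     "accountable_owner": "clinical-ai@example.com",
     "incident_contact": "", "last_audit": None},
    {"model": "churn-model-2.0", "status": "retired",
     "accountable_owner": None, "incident_contact": None, "last_audit": None},
]

def accountability_gaps(entries):
    """Map each deployed model to the required governance fields it is missing."""
    gaps = {}
    for e in entries:
        if e.get("status") != "deployed":
            continue  # only models in production are checked
        missing = [f for f in REQUIRED_FIELDS if not e.get(f)]
        if missing:
            gaps[e["model"]] = missing
    return gaps

print(accountability_gaps(registry))
```

An empty result means every deployed model has a named owner and contact on record; it does not replace reading the incident response plans themselves.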

Wrapping Up

Building ethical AI systems is essential to harnessing the benefits of AI while minimizing potential harms. Fairness, transparency, and accountability should not be afterthoughts but core principles embedded throughout the AI lifecycle. By establishing a robust framework for ethical AI, we can create systems that respect human rights and promote trust in AI technologies.

#EthicalAI #FairnessInAI #Transparency #Accountability #AIPrivacy #DataEthics #AIForGood #ResponsibleAI #AITrends #TechForGood
